History of the Term AI

With so much growing interest in and fear of AI, it may be helpful to know some history of the term: how it came to be, and why it keeps generating buzz. Now nearly 70 years old, “artificial intelligence” was first coined out of the most uniquely human of motives: ego and competitiveness.

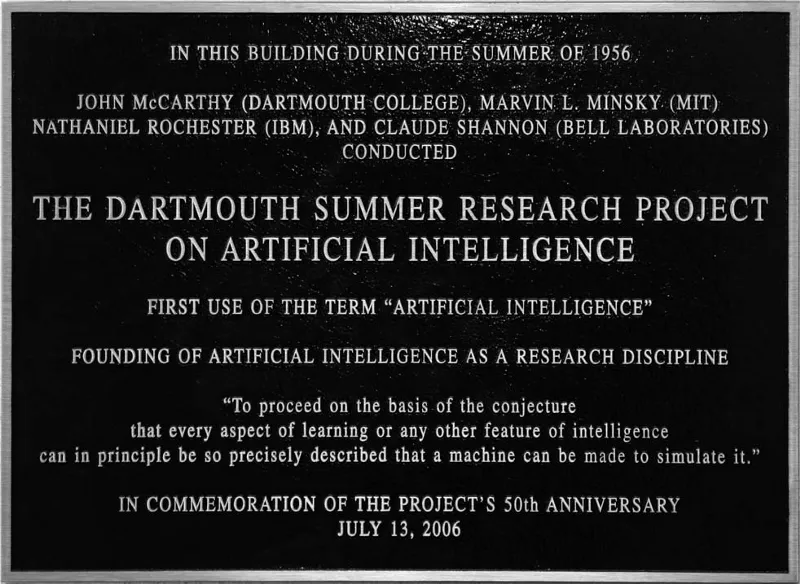

It was the summer of 1955 in New England. Computer scientist John McCarthy of Dartmouth was preparing a research proposal with Marvin Minsky (Harvard), Nathaniel Rochester (IBM), and Claude Shannon (Bell Telephone Laboratories). At the time, their study would normally have fallen squarely into the category of “cybernetics”, i.e. automated systems. But that posed a problem.

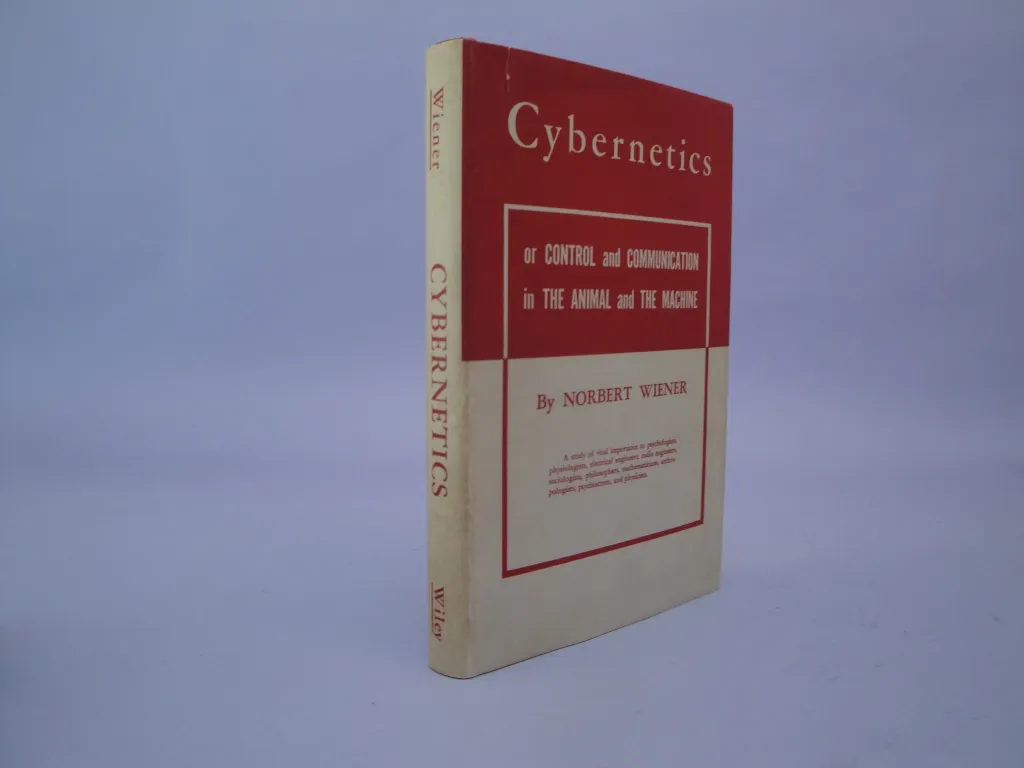

The discipline of cybernetics was first formulated in an incredibly influential book of the same name by Norbert Wiener, a professor at nearby MIT. Much of his early cybernetics work focused on trying to control and automate systems. And while we rightly worry about the use of AI-guided weaponry now, it was Wiener who created one of the very first automated weapon systems — way back during World War II.

With the rise of the Cold War, Wiener became an incredibly influential (many say domineering) force in any conversation about the future of computing. That was often quite literally the case: if a cybernetics conference was scheduled near him in Cambridge, Wiener was notorious for showing up in person and lecturing everyone at length. How do you hold an academic conference on computing, but keep Wiener from coming?

Simple: Come up with another term, and use that to advertise the conference.

“One reason for inventing the term [AI] was to escape association with cybernetics,” McCarthy once bluntly explained. “I wished to avoid having either to accept Norbert Wiener as a guru or having to argue with him.”

So in a very real sense, AI started out as a term of distraction, not clarity. Created to position itself apart from cybernetics, it was coined so broadly that roughly any automated computer system can be called artificial intelligence.

It’s why “AI” has remained such a compelling marketing term through the decades. The explosive growth of ChatGPT since 2022 has generated such excitement that it’s easy to forget another AI-related product enjoyed similar buzz less than a decade earlier.

But it’s true: back in 2014, AI hype orbited around voice-activated personal assistants, with Apple’s Siri, Amazon’s Alexa, and Microsoft’s Cortana leading the charge. As with other “truthy” technology, Hollywood turbo-charged this excitement, with Jarvis from the Iron Man movies and the Scarlett Johansson-voiced AI depicted in Her. “Personal assistant AI is going to change everything!” briefly became the grand pronouncement of that time (almost 10 years before the date of this op-ed).

Back then, I began getting pitches from large companies about designing what seemed like magic systems with sprinkles of “AI” on top. When probed further, however, it appeared that the executives behind these proposals were not actually interested in artificial intelligence, but in making sure their product offerings seemed fresh and competitive. They’d read in various pop tech magazines that AI was something they needed to pay attention to, but they hadn’t looked deeply into it. As a result, they became impatient when I tried to take them through the details of potential implementations.

We do continue to use voice-driven assistants, of course, but in narrow contexts where they’re genuinely useful, like map guidance while we’re driving. AI can be clever and witty in movies, but in real life we quickly realized it’s an anemic representation of the human soul.

It’s always the same pattern: every so often, new AI applications and playthings emerge from breakthroughs at research universities. These cause bursts of excitement among people at the periphery, particularly marketers and well-paid evangelists, who then create inflated expectations around them. These expectations blow way past what’s technically possible for these systems, but company heads ask engineers to do the impossible anyway. And the AI applications continue doing only what they’re good at: nerdy automation tasks. The public feels let down again.

If there’s any way out of this frustrating cycle, it might require undoing the confusion inadvertently caused by John McCarthy back in the 1950s. Inevitably, the very term “artificial intelligence” inspires us to think about longevity and the human soul, and heightens any fear around automation. People simply snap into a panic state instead of investigating closely.

A better term to replace “AI”? Perhaps “alongside technologies”. It’s less catchy, and maybe that’s the point. This stuff shouldn’t be so catchy. And it emphasizes that we need human-guided systems for AI to work well.

After McCarthy’s first AI conference in 1956, attendees raised millions of dollars in grants to fulfill a modest goal: Create a machine as intelligent as a human. They expected to succeed within one generation. Two generations and several hype cycles later, it might become clear that the best automations focus on amplifying the best of humans and the best of machines — and that trying to make machines “human” will always be a losing proposition.

Related Reading

- This article is modified from the original, “Inside the Very Human Origin of the Term Artificial Intelligence” by Amber Case

- Alongside Technologies

- History of Cybernetics

- LLMs

- Truth vs. Truthy