Affective Computing

Caseorganic (Talk | contribs)

Revision as of 00:09, 27 November 2010

Learning the Language of Machines

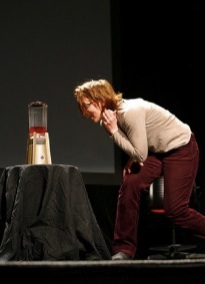

Rather than teaching machines to understand humans, MIT’s Kelly Dobson programmed a blender to respond to voice activation, though not to the voice one typically uses. Instead of having the user say “Blender, ON!”, she built an auditory model of a machine voice.

If she wants the blender to begin, she simply growls at it. The low-pitched “Rrrrrrrrr” she makes turns the blender on low. If she wants to increase the speed of the machine, she raises her voice to “RRRRRRRRRRR!”, and the machine increases in intensity. This way, the machine responds to volume and velocity rather than to human words. Why would a machine need to understand a human command when it can understand a command much more similar to its own language?
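The growl-to-speed idea can be illustrated with a minimal sketch. This is not Dobson's implementation, which the text does not describe; it only assumes that loudness is measured as the root-mean-square (RMS) amplitude of a short audio frame and mapped onto a few speed levels. The function names, the `on_threshold` value, the three speed levels, and the synthetic sine-wave "growl" are all hypothetical choices made for illustration.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a frame of samples in [-1.0, 1.0]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def blender_speed(samples, on_threshold=0.05, max_level=3):
    """Map frame loudness to a speed level: 0 is off, max_level is fastest.

    The threshold and number of levels are illustrative assumptions.
    """
    loudness = rms(samples)
    if loudness < on_threshold:
        return 0
    # Scale loudness between the threshold and full scale (1.0) onto 1..max_level.
    fraction = (loudness - on_threshold) / (1.0 - on_threshold)
    return min(max_level, 1 + int(fraction * max_level))


def growl(amplitude, n=800, freq=100.0, rate=8000):
    """Synthetic stand-in for a growl: a low sine tone at the given amplitude."""
    return [amplitude * math.sin(2 * math.pi * freq * i / rate) for i in range(n)]


if __name__ == "__main__":
    for label, amplitude in [("silence", 0.0), ("Rrrrr", 0.2), ("RRRRR!", 1.0)]:
        print(label, "-> speed level", blender_speed(growl(amplitude)))
```

A real system would read frames from a microphone and would likely smooth the level over time so the motor does not jump between speeds; both are left out here to keep the mapping itself visible.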

See: Media Lab at MIT