Difference between revisions of "Mediated Reality"



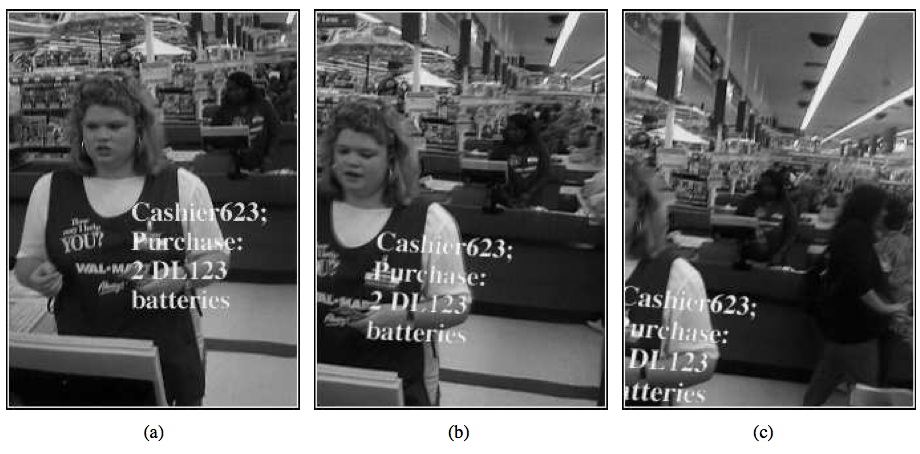

| − | [[Image:mediated-reality-steve-mann-notes.jpg|thumb|center| | + | [[Image:mediated-reality-steve-mann-notes.jpg|thumb|center|700px|"Mediated reality as a photographic/videographic memory prosthesis: (a) Wearable face– recognizer with virtual “name tag” (and grocery list) appears to stay attached to the cashier (b), even when the cashier is no longer within the field of view of the tapped eye and transmitter (c)".<ref>[http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.62.3899&rep=rep1&type=pdf VideoOrbits on Eye Tap devices for deliberately Diminished Reality or altering the visual perception of rigid planar patches of a real world scene] (PDF download) by Steve Mann and James Fung. University of Toronto, 10 King’s College Road, Toronto, Canada.</ref>]] |

{{clear}} | {{clear}} | ||


Latest revision as of 00:33, 31 July 2011

Definition

Mediated reality is a term coined by Steve Mann to describe a mix of virtual information with visual information from the real world. As Mann and Hal Niedzviecki put it: "Mediated Reality is the technological process by which the WearComp user can mediate all visual input and output. Mediation includes overlaying virtual objects on 'real life', and taking away or otherwise visually altering objects."[1]

According to Steve Mann, "mediated Reality is created when virtual, or computer generated, information is mixed with what the user would otherwise normally see. Eye Tap is suitable for creating a mediated reality because it is able to absorb, quantify, and resynthesize the light the user would normally see. When the light is resynthesized under computer control, information can be added or removed from the scene before it is presented to the user. The virtual information or light as seen through the display must be properly registered and aligned within the user's field of view. To achieve this, a method of camera-based head-tracking"[3] is employed.
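
The add-or-remove step described in the quotation can be illustrated with a small sketch. The Python below is not Mann's implementation: it assumes a frame is a grid of grayscale values, and the function name `mediate`, its parameters, and the fixed `offset` are all hypothetical. In a real system the alignment would come from camera-based head tracking rather than being passed in by hand.

```python
# Illustrative sketch only: frames are 2D lists of grayscale pixels (0-255).
# The name `mediate` and all of its parameters are assumptions for this example.

def mediate(frame, overlay, offset, erase=None, fill=0):
    """Return a mediated copy of `frame`.

    overlay: 2D list of pixels; None marks a transparent pixel.
    offset:  (row, col) of the overlay's top-left corner in the frame,
             standing in for the registration a head tracker would supply.
    erase:   optional (top, left, height, width) region to blank out,
             standing in for removing information from the scene.
    """
    out = [row[:] for row in frame]  # work on a copy of the captured frame

    # Remove information: overwrite the chosen region with a flat fill value.
    if erase is not None:
        top, left, height, width = erase
        for r in range(max(top, 0), min(top + height, len(out))):
            for c in range(max(left, 0), min(left + width, len(out[r]))):
                out[r][c] = fill

    # Add information: paste the registered overlay, skipping transparent pixels.
    d_row, d_col = offset
    for i, overlay_row in enumerate(overlay):
        for j, pixel in enumerate(overlay_row):
            r, c = i + d_row, j + d_col
            if pixel is not None and 0 <= r < len(out) and 0 <= c < len(out[r]):
                out[r][c] = pixel
    return out


frame = [[10] * 4 for _ in range(3)]   # a tiny uniform "scene"
label = [[255, None], [255, 255]]      # a virtual label with one transparent pixel
mediated = mediate(frame, label, offset=(1, 2), erase=(0, 0, 1, 2))
```

Here the erased region stands in for diminishing the scene and the label for a virtual overlay; both are applied to a copy, so the captured frame is left unchanged.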

Related Reading

References

1. Mann, Steve and Hal Niedzviecki. Cyborg: Digital Destiny and Human Possibility in the Age of the Wearable Computer. 2001. Pg. 265.

2. Mann, Steve and James Fung. VideoOrbits on Eye Tap devices for deliberately Diminished Reality or altering the visual perception of rigid planar patches of a real world scene. University of Toronto, 10 King’s College Road, Toronto, Canada.

3. Ibid.